When inpainting with Stable Diffusion, you should use a dedicated inpainting model rather than a base or fine-tuned model whenever one is available. Inpainting models are specifically trained to reconstruct missing or masked parts of an image, which leads to more accurate inpainting results.

During training, inpainting models see not only complete images but also images with parts masked out, along with the mask that marks the missing region. This teaches the model to fill in missing sections so that they blend with the rest of the image.

This approach is elaborated on in the model card for the Stable Diffusion 1.5 inpainting checkpoint:

The Stable-Diffusion-Inpainting was initialized with the weights of the Stable-Diffusion-v-1-2. First, there were 595k steps of regular training, followed by 440k steps of inpainting training at resolution 512×512 on “laion-aesthetics v2 5+” and 10% dropping of the text-conditioning to improve classifier-free guidance sampling. For inpainting, the UNet has 5 additional input channels (4 for the encoded masked image and 1 for the mask itself), whose weights were zero-initialized after restoring the non-inpainting checkpoint. During training, we generate synthetic masks and in 25% of cases mask everything.

https://huggingface.co/runwayml/stable-diffusion-inpainting
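To make the training recipe in the quote more concrete, here is a minimal sketch of how the mask and caption for a single training example might be prepared. The 25% "mask everything" rate and the 10% text-conditioning dropout come from the model card. The random-rectangle mask is a hypothetical stand-in, because the card only says "synthetic masks" without describing their shape. The function names are illustrative, not taken from any training codebase.

```python
import random
from typing import List

Mask = List[List[int]]  # 1 = pixel the model must fill in, 0 = pixel kept visible


def sample_training_mask(height: int, width: int, rng: random.Random,
                         full_mask_prob: float = 0.25) -> Mask:
    """Return a synthetic binary mask; mask the whole image with probability full_mask_prob."""
    if rng.random() < full_mask_prob:
        return [[1] * width for _ in range(height)]
    # Hypothetical stand-in for the model card's "synthetic masks":
    # one random, non-empty, axis-aligned rectangle.
    top = rng.randrange(height)
    left = rng.randrange(width)
    bottom = rng.randint(top + 1, height)  # exclusive bottom edge
    right = rng.randint(left + 1, width)   # exclusive right edge
    return [[1 if top <= y < bottom and left <= x < right else 0
             for x in range(width)]
            for y in range(height)]


def maybe_drop_caption(caption: str, rng: random.Random,
                       drop_prob: float = 0.10) -> str:
    """Text-conditioning dropout: train on an empty prompt drop_prob of the time."""
    return "" if rng.random() < drop_prob else caption


# Usage
rng = random.Random(42)
mask = sample_training_mask(4, 4, rng)
caption = maybe_drop_caption("a red barn in a snowy field", rng)
```

The mask and the encoded masked image are what the 5 extra UNet input channels receive. Dropping the caption some of the time trains the model to also work unconditionally, which is what classifier-free guidance relies on at sampling time.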

In short, inpainting models generally give noticeably better inpainting results than base or fine-tuned models. That saves you the time you would otherwise spend regenerating and fixing poorly blended areas.

Note: Inpainting models are nearly identical in size to the corresponding generation models. File size is determined by the network architecture, not by the images the model was trained on. The inpainting UNet is the same network with only 5 extra input channels in its first layer (4 for the encoded masked image and 1 for the mask), which adds a negligible number of weights.
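To put the size difference in numbers: the model card quoted above says the inpainting UNet has 5 additional input channels. The short calculation below assumes, as in the Stable Diffusion 1.x UNet, that the first convolution maps its input to 320 feature channels with a 3×3 kernel. Everything else in the checkpoint keeps the same architecture.

```python
# Assumptions (Stable Diffusion 1.x UNet): the first convolution maps its
# input to 320 feature channels using a 3x3 kernel.
LATENT_CHANNELS = 4          # noisy latent fed to every SD UNet
EXTRA_INPAINT_CHANNELS = 5   # 4 for the encoded masked image + 1 for the mask
CONV_IN_OUT_CHANNELS = 320
KERNEL_SIZE = 3


def conv_in_weight_count(in_channels: int) -> int:
    """Weights (bias excluded) in the UNet's first 3x3 convolution."""
    return CONV_IN_OUT_CHANNELS * in_channels * KERNEL_SIZE * KERNEL_SIZE


regular = conv_in_weight_count(LATENT_CHANNELS)
inpainting = conv_in_weight_count(LATENT_CHANNELS + EXTRA_INPAINT_CHANNELS)
extra = inpainting - regular
print(regular, inpainting, extra)  # 11520 25920 14400
```

About 14,400 extra weights is a tiny fraction of a UNet with hundreds of millions of parameters, so the two files end up almost the same size. The bias of that layer depends only on the 320 output channels and does not grow at all.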

Do All Fine-Tuned Models Have Inpainting Models Available?

Not always.

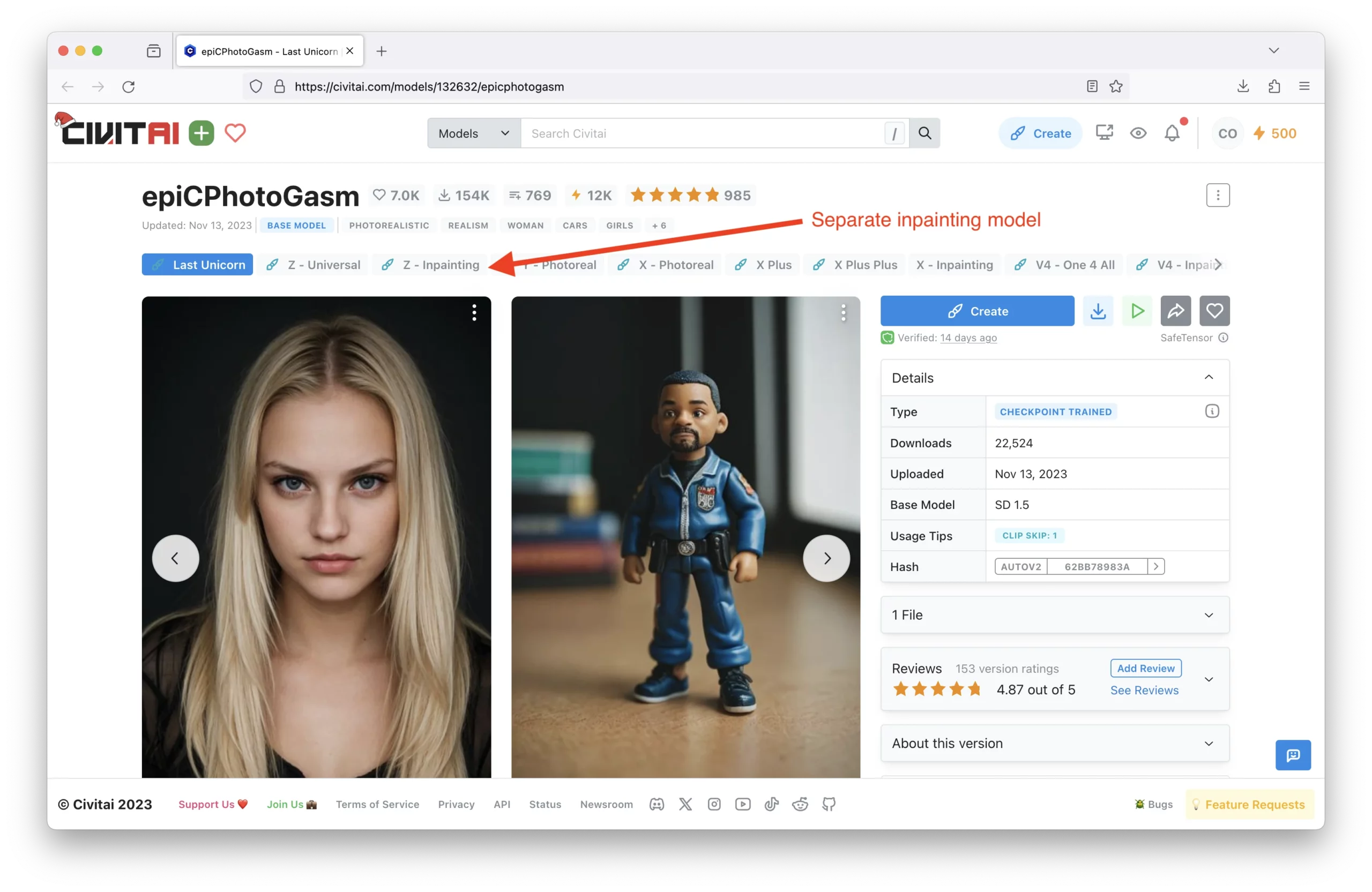

It’s up to a model’s creators to train a separate inpainting version. A look at various models on Civitai shows mixed results. Popular checkpoints such as majicMIX realistic and epiCPhotoGasm have separate inpainting models for most of their releases:

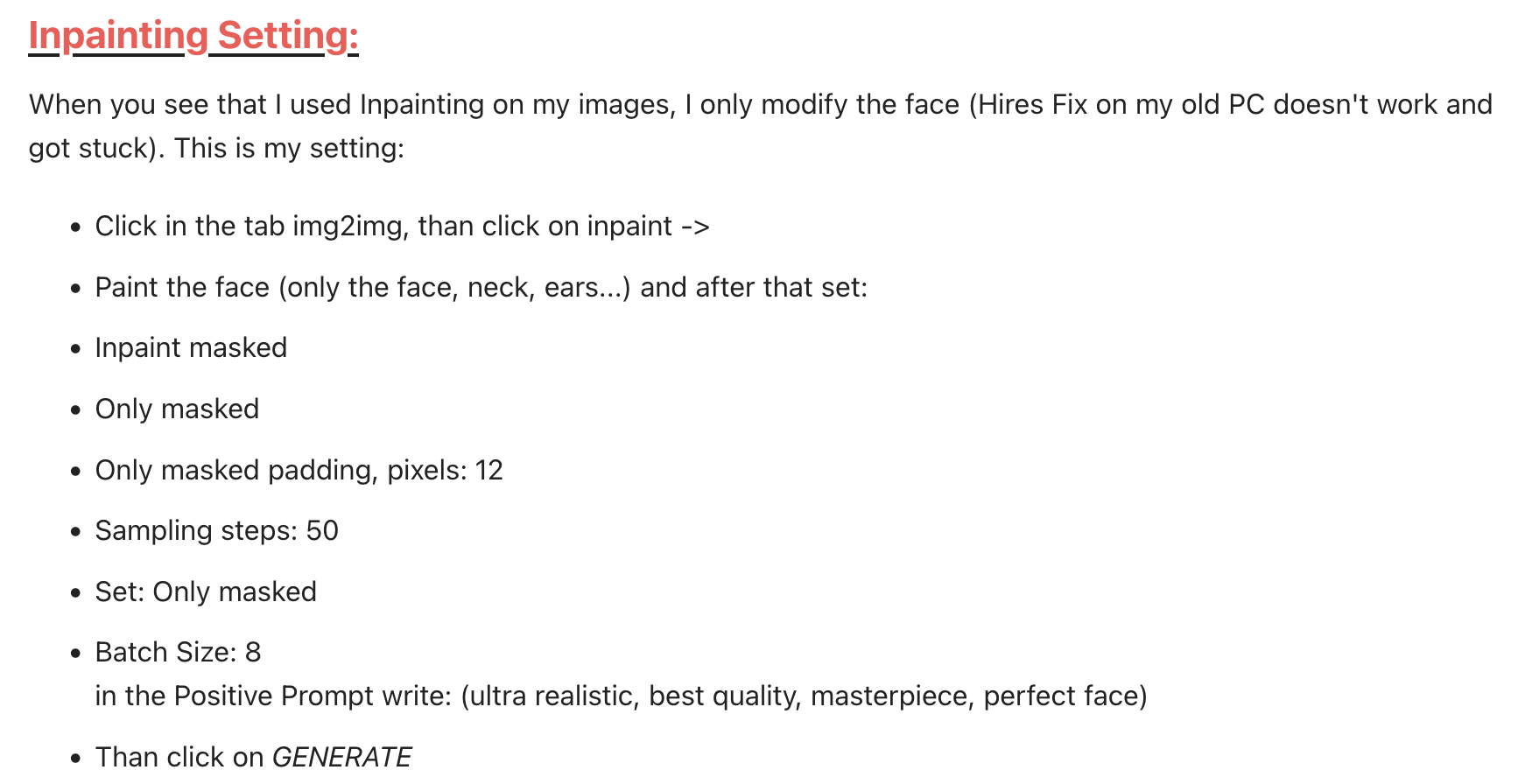

However, other popular models, such as Aniverse and RealCartoon3D, release an inpainting model only once every few versions or not at all. Instead, they offer specific guidance on how to use their model for inpainting:

When No Inpainting Model is Available

You have two options if no inpainting model is available:

Option 1: Use an off-the-shelf inpainting model that was trained on the same base model.

Many models are fine-tuned from an existing Stable Diffusion base model (e.g., 1.5 or XL). If no inpainting model is available for the fine-tuned model you’re using, you can use the inpainting model for its base model instead.
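If you have both checkpoints on disk as .safetensors files, you can also check locally which Stable Diffusion family each one belongs to and whether a file really is an inpainting model. A .safetensors file starts with an 8-byte little-endian header length followed by a JSON header listing every tensor's name and shape, so this can be done without loading any weights. This sketch assumes original-format (LDM/AUTOMATIC1111-style) checkpoints. The conv-layer key name and the key-prefix family heuristic are assumptions and will not match diffusers-format model folders.

```python
import json
import struct
from typing import Dict, List

# Assumption: name of the UNet's first convolution in original-format
# (LDM / AUTOMATIC1111-style) checkpoints. diffusers-format files differ.
CONV_IN_KEY = "model.diffusion_model.input_blocks.0.0.weight"


def parse_safetensors_header(raw: bytes) -> Dict[str, dict]:
    """Decode the JSON header from the first bytes of a .safetensors file."""
    (header_len,) = struct.unpack("<Q", raw[:8])  # u64, little-endian
    return json.loads(raw[8:8 + header_len])


def read_safetensors_header(path: str) -> Dict[str, dict]:
    """Read only the header of a .safetensors file; tensor data is not loaded."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        return json.loads(f.read(header_len))


def describe_checkpoint(header: Dict[str, dict]) -> Dict[str, object]:
    """Guess the model family and whether the UNet is an inpainting UNet."""
    keys = [k for k in header if k != "__metadata__"]
    # Heuristic: the text-encoder key prefix differs between families.
    if any(k.startswith("conditioner.embedders.") for k in keys):
        family = "SDXL"
    elif any(k.startswith("cond_stage_model.model.") for k in keys):
        family = "SD 2.x"
    elif any(k.startswith("cond_stage_model.transformer.") for k in keys):
        family = "SD 1.x"
    else:
        family = "unknown"
    shape: List[int] = header.get(CONV_IN_KEY, {}).get("shape", [])
    # Inpainting UNets take 9 input channels: 4 latent + 4 masked image + 1 mask.
    is_inpainting = len(shape) == 4 and shape[1] == 9
    return {"family": family, "conv_in_shape": shape, "is_inpainting": is_inpainting}


# Usage (hypothetical file name):
# info = describe_checkpoint(read_safetensors_header("myModel_inpainting.safetensors"))
# print(info)
```

If the fine-tuned model and the candidate inpainting model report different families, they are not compatible. A file that claims to be an inpainting model should report a first-layer shape with 9 input channels.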

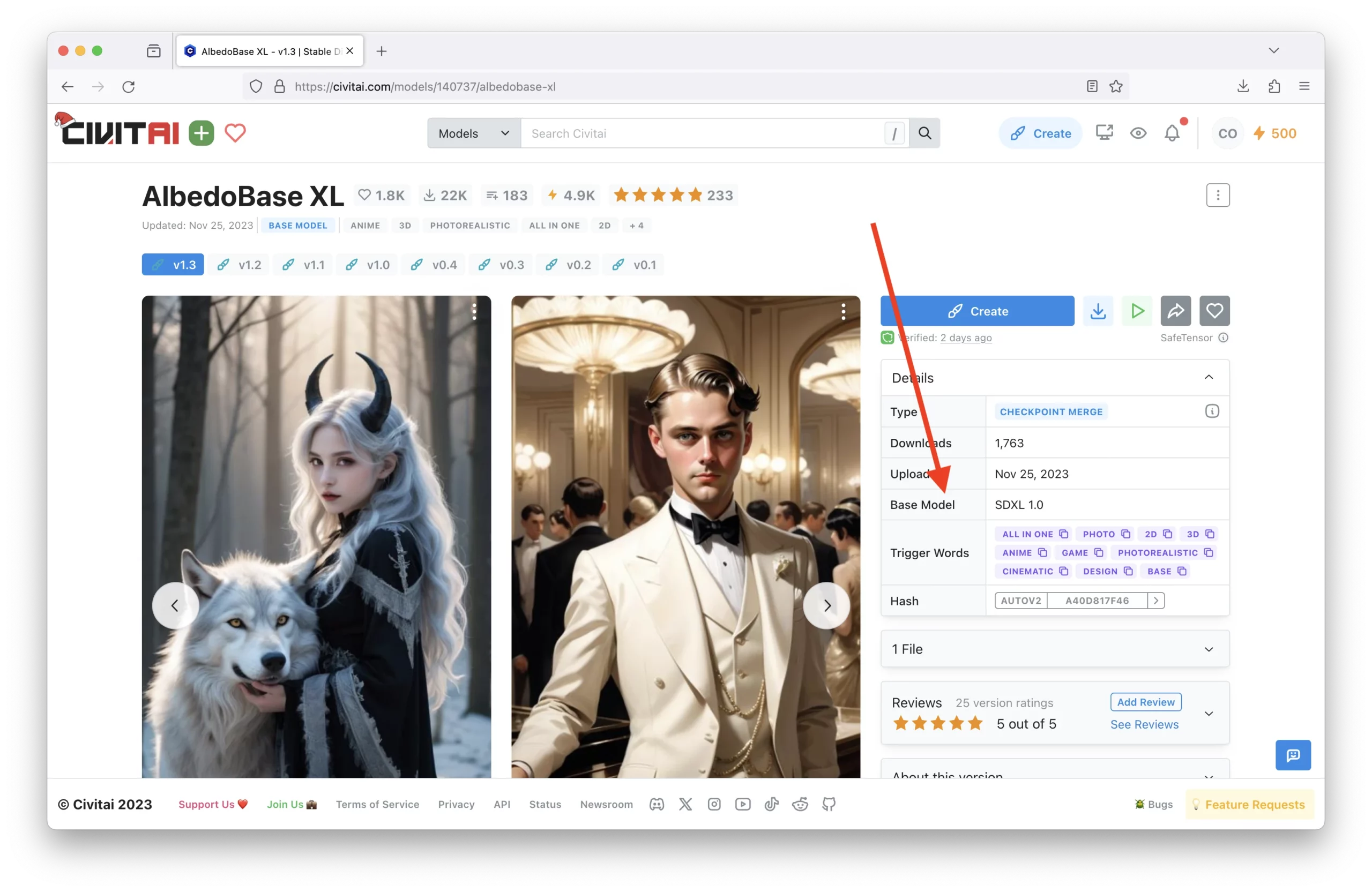

To find out which base model was used for fine-tuning, look at the model card. The ‘Base Model’ is listed on the right side of the page.

Here are links to the base inpainting models for each Stable Diffusion version:

Option 2: Train your own inpainting model

This is surprisingly easy to do. Reddit user /u/MindInTheDigits wrote an excellent tutorial on how to create your own inpainting model. You can find the tutorial here.

Simply put, you create your own inpainting model by merging three checkpoints: the base model, the base inpainting model, and your own fine-tuned model. No additional training is required.

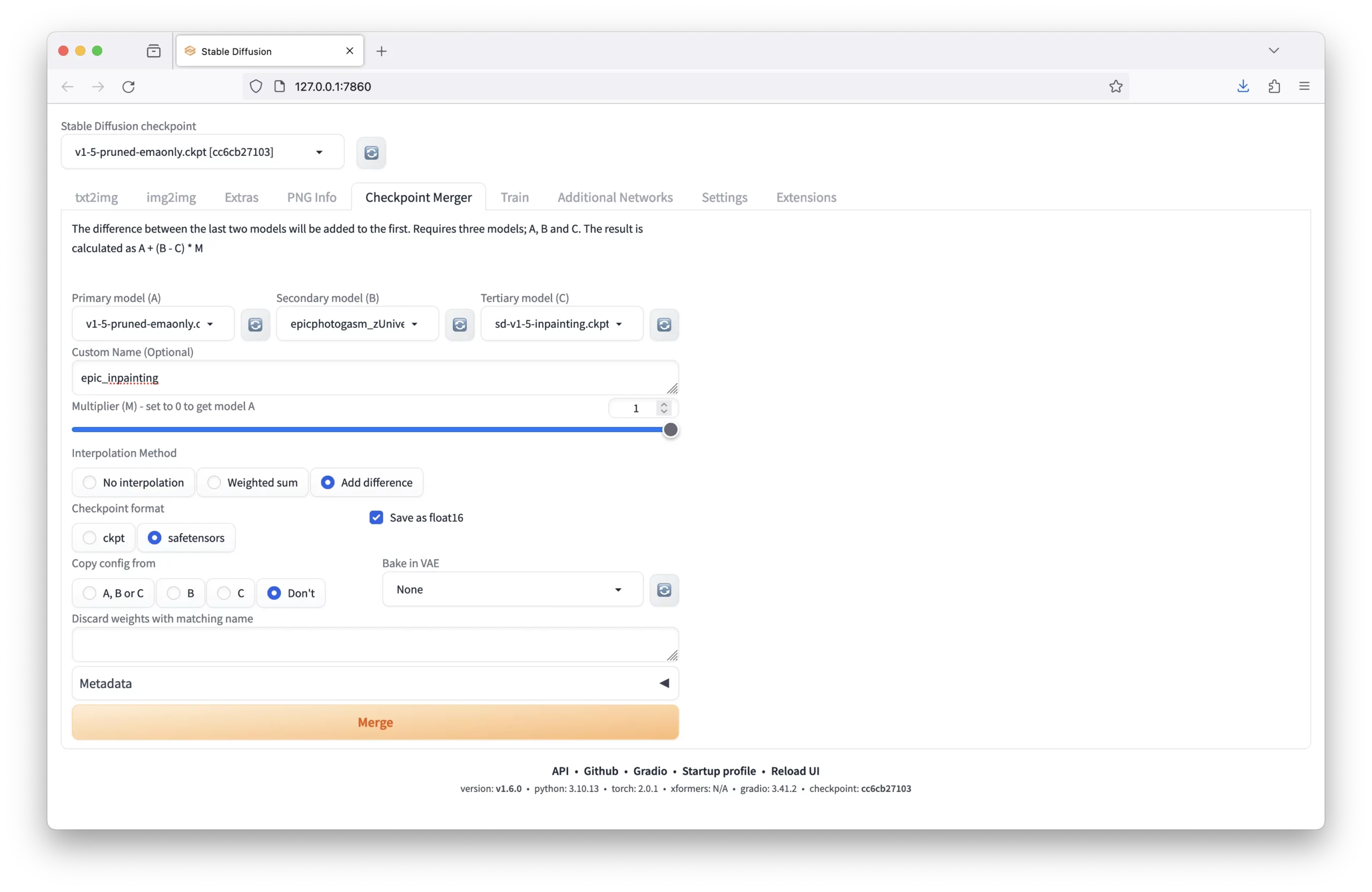

To create your own inpainting model in AUTOMATIC1111, go to the ‘Checkpoint Merger’ tab.

Set the models and parameters as follows:

- Primary Model (A): Inpainting Base Model

- Secondary Model (B): Fine-Tuned Model

- Tertiary Model (C): Base Model

- Custom Name: Your choice (appending _inpainting to the model name is recommended)

- Multiplier: 1.0

- Interpolation Method: Add difference. The math behind this is A + (B – C) * M, where A is the inpainting base model, B is the fine-tuned model, C is the base model, and M is the multiplier. Subtracting the base model from the fine-tuned model isolates what the fine-tuning changed. Adding that difference to the inpainting base model produces a new inpainting model that keeps its inpainting ability while picking up your fine-tuned model’s style and subject knowledge. With M = 1.0, the full difference is applied.

- Save as Float16: True

- Checkpoint Format: .safetensors

- Copy config from: Don’t

Then click ‘Merge’.
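The ‘Add difference’ formula A + (B – C) * M is applied weight by weight across the three checkpoints. The following is a minimal, dependency-free sketch of that arithmetic. It assumes each checkpoint has already been loaded into a dictionary that maps weight names to a (shape, flat row-major list of floats) pair, whereas real tools operate on PyTorch tensors. One wrinkle is the UNet's first convolution, where the inpainting model (A) has 9 input channels while B and C have 4. This sketch applies B - C only to the 4 shared channels and copies the 5 inpainting-specific channels from A unchanged. Treat that handling as an assumption, not a description of AUTOMATIC1111's code. The name add_difference and the data layout are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for a tensor: (shape, flat row-major data).
Tensor = Tuple[Tuple[int, ...], List[float]]
StateDict = Dict[str, Tensor]


def add_difference(a: StateDict, b: StateDict, c: StateDict,
                   m: float = 1.0) -> StateDict:
    """Return A + (B - C) * M for every weight in A."""
    merged: StateDict = {}
    for key, (shape_a, data_a) in a.items():
        if key not in b or key not in c:
            # Weight only exists in the inpainting model: keep it as-is.
            merged[key] = (shape_a, list(data_a))
            continue
        shape_b, data_b = b[key]
        shape_c, data_c = c[key]
        if shape_b != shape_c:
            raise ValueError(f"{key}: B and C have different shapes")
        if shape_a == shape_b:
            out = [x + (y - z) * m for x, y, z in zip(data_a, data_b, data_c)]
        elif (len(shape_a) == 4 and shape_a[0] == shape_b[0]
              and shape_a[2:] == shape_b[2:] and shape_a[1] > shape_b[1]):
            # First conv layer: A has extra input channels (e.g. 9 vs 4).
            # Apply the difference to the shared channels only; the extra
            # masked-image/mask channels are copied from A.
            out = list(data_a)
            out_ch, in_a, kh, kw = shape_a
            in_b = shape_b[1]
            k = kh * kw
            for o in range(out_ch):
                for i in range(in_b):
                    for s in range(k):
                        ia = (o * in_a + i) * k + s  # flat index in A
                        ib = (o * in_b + i) * k + s  # flat index in B and C
                        out[ia] = data_a[ia] + (data_b[ib] - data_c[ib]) * m
        else:
            raise ValueError(f"{key}: incompatible shapes {shape_a} vs {shape_b}")
        merged[key] = (shape_a, out)
    return merged
```

For example, with M = 1.0, a weight that is 1.0 in the inpainting base model, 5.0 in the fine-tuned model, and 4.0 in the base model becomes 1.0 + (5.0 - 4.0) = 2.0 in the merged model.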

Avoid Using Fine-Tuned Models for Inpainting

Using a regular (non-inpainting) fine-tuned model for inpainting often leads to poor results. Common issues include:

- Halos around the inpainted area

- The inpainted area not matching the color of the surrounding image

- Artifacts appearing in the inpainted area

Using the Inpainting Model

If you need guidance on the inpainting process itself, we have written an entire post on the subject. In that article, we explore the parameters you can adjust and explain what each one does, to help you achieve the best results possible.