What You'll Learn

Learn how seeds control the starting point of image generation in Stable Diffusion, and discover how to reuse them to create variations, blend results, or reproduce images with subtle prompt changes.

Video Walkthrough

Prefer watching to reading? Follow along with a step-by-step video guide.

Seed Values Decoded: Elevate Your Stable Diffusion Techniques

A seed is a number that initializes the pseudo-random noise a Stable Diffusion model starts from when it generates an image.

The seed value can be anywhere between 0 and 2^32 (4,294,967,296). It is either set automatically by the interface you are using to generate images (e.g., in Automatic 1111 a seed of -1 means "pick a random seed") or predefined by you.
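As a rough illustration of the "-1 means random" convention, here is a minimal Python sketch. The function name `resolve_seed` and the exact range it draws from are assumptions for this example, not the code any particular interface uses.

```python
import random

MAX_SEED = 2**32  # upper bound quoted in this article


def resolve_seed(requested: int) -> int:
    """Return a concrete seed: draw one at random for -1, otherwise validate it."""
    if requested == -1:
        # Hypothetical stand-in for what a UI does when the seed field is -1.
        return random.randrange(MAX_SEED)
    if not 0 <= requested < MAX_SEED:
        raise ValueError(f"seed must be -1 or in [0, {MAX_SEED})")
    return requested


print(resolve_seed(1140024820))  # a fixed seed is passed through unchanged
print(resolve_seed(-1))          # a fresh random seed on every call
```

Whatever seed is finally chosen is the number you would write down if you want to reproduce the image later.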

How Seeds Affect Image Generation

Every image generated by a Stable Diffusion model is the result of noise that is refined over a series of steps. The very first image in the generation process is pure noise, much like the static you may have seen on an old television set.

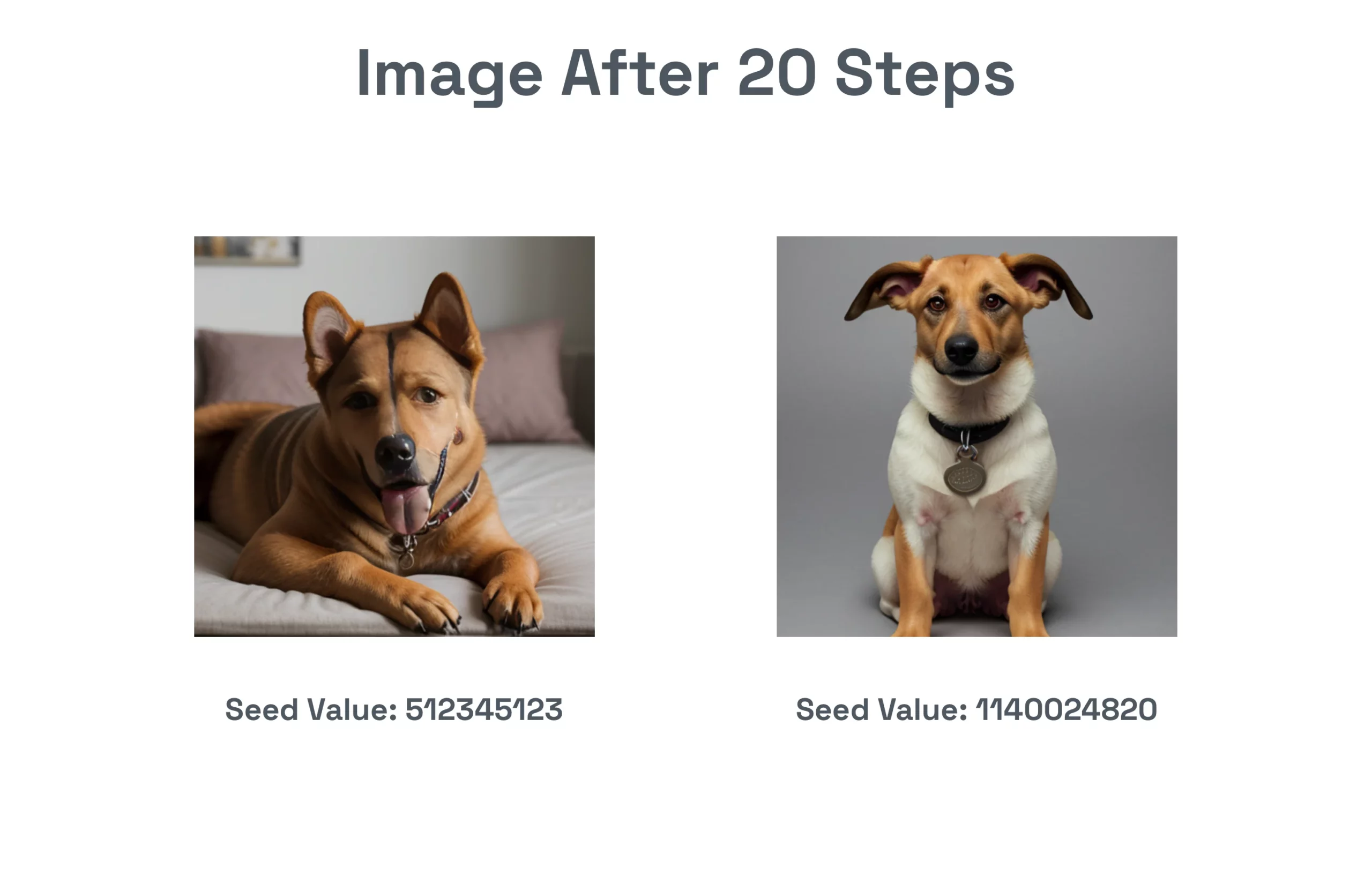

As an example, here are two starting images generated with the same prompt, dimensions, and other parameters, but with different seed values:

While they may not appear all that different to our eyes, to the computer, they are drastically different images. Here are the same images 20 steps later:

So what is going on here?

When the diffusion model generates an image, it gradually reduces the noise step-by-step until it reaches the final image.

As we stated at the start, the seed defines the initial noise, and the sampler then removes that noise a little at a time over the configured number of steps.
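The link between a seed and its starting noise can be sketched in a few lines of Python. This is a toy model: it uses the standard library's `random` module and a tiny 8x8 grid, whereas a real pipeline draws a much larger tensor from its own generator. The helper name `initial_noise` is made up for this example.

```python
import random


def initial_noise(seed: int, width: int = 8, height: int = 8) -> list[list[float]]:
    """Build a small grid of Gaussian noise that depends only on the seed and size."""
    rng = random.Random(seed)  # a private generator, so the result is repeatable
    return [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(height)]


same_a = initial_noise(1140024820)
same_b = initial_noise(1140024820)
other = initial_noise(1140024821)

print(same_a == same_b)  # same seed: identical noise
print(same_a == other)   # different seed: different noise
```

Because the starting noise is identical for identical seeds, everything the sampler does afterwards can be repeated exactly, as long as the other settings stay the same.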

Pretty cool!

Do Close Seeds Produce Similar Images?

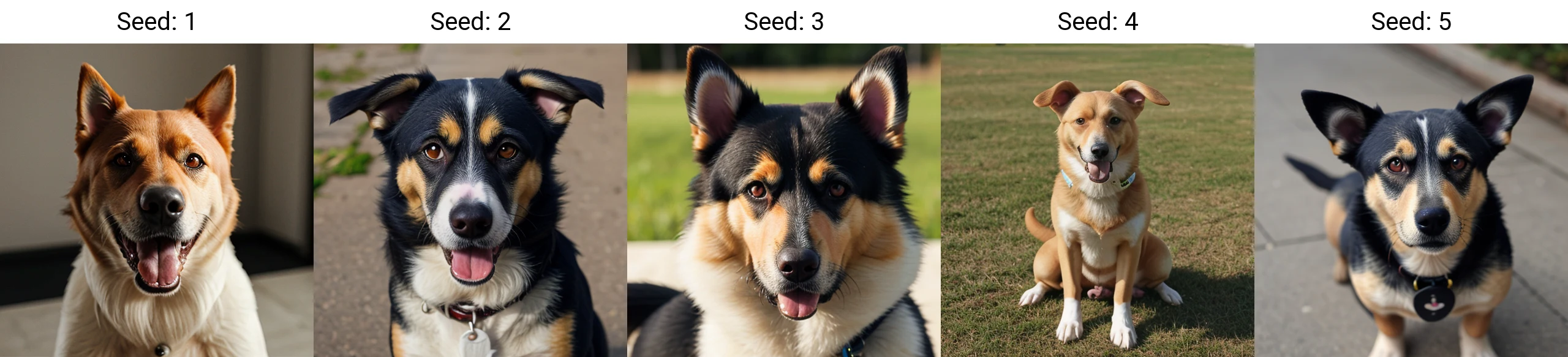

There is no apparent correlation between the seed value and the final image. Going back to our basic prompt of ‘dog’ and setting the seeds from 1 to 5 using the handy XYZ Plot script, we can see that the images are all very different:

Any similarities between dogs may be due to a smaller dataset that the model was trained upon.
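One way to convince yourself that neighboring seeds are unrelated is to measure how correlated their noise is. The sketch below again uses Python's built-in generator as a stand-in (an assumption; it is not the generator a Stable Diffusion interface uses), and the helper names are hypothetical.

```python
import math
import random


def noise(seed: int, n: int = 4096) -> list[float]:
    """Draw n Gaussian samples from a generator initialized with the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def correlation(a: list[float], b: list[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Compare each seed from 1 to 4 with its immediate neighbor.
for seed in range(1, 5):
    print(seed, seed + 1, round(correlation(noise(seed), noise(seed + 1)), 3))
```

The printed values should hover near zero, which is what you would expect from two unrelated noise patterns, even though the seeds differ by only 1.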

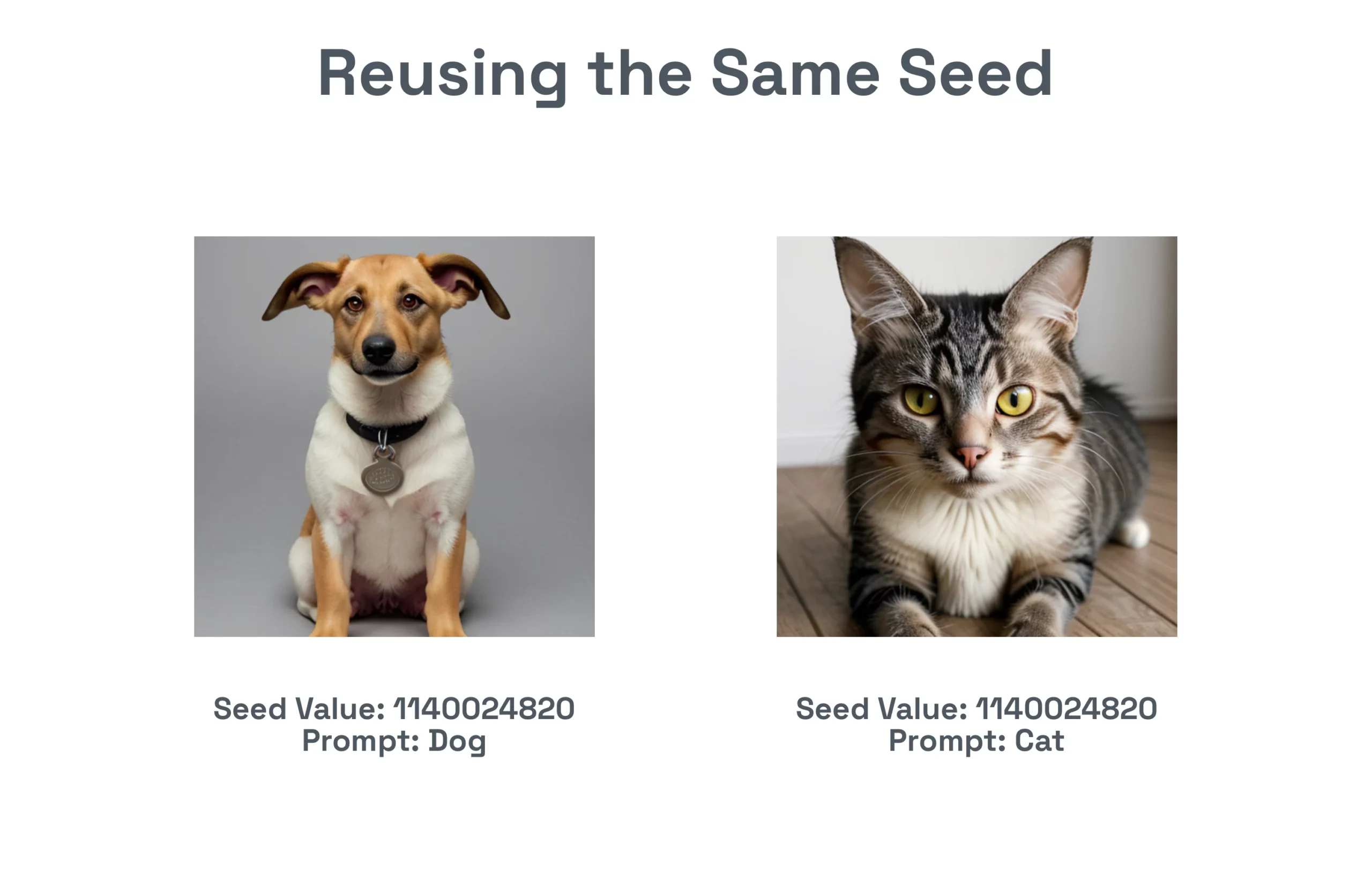

But what about the same seed and a different prompt?

For example, earlier we used the prompt ‘dog’ with the seed value 1140024820. If we reuse that seed value and change the prompt to ‘cat’, we may notice some similarities in coloring.

However, this method isn’t a reliable way to make consistent images of different subjects as what you provide in your prompt will guide the image generation process accordingly.

How to Use Seeds

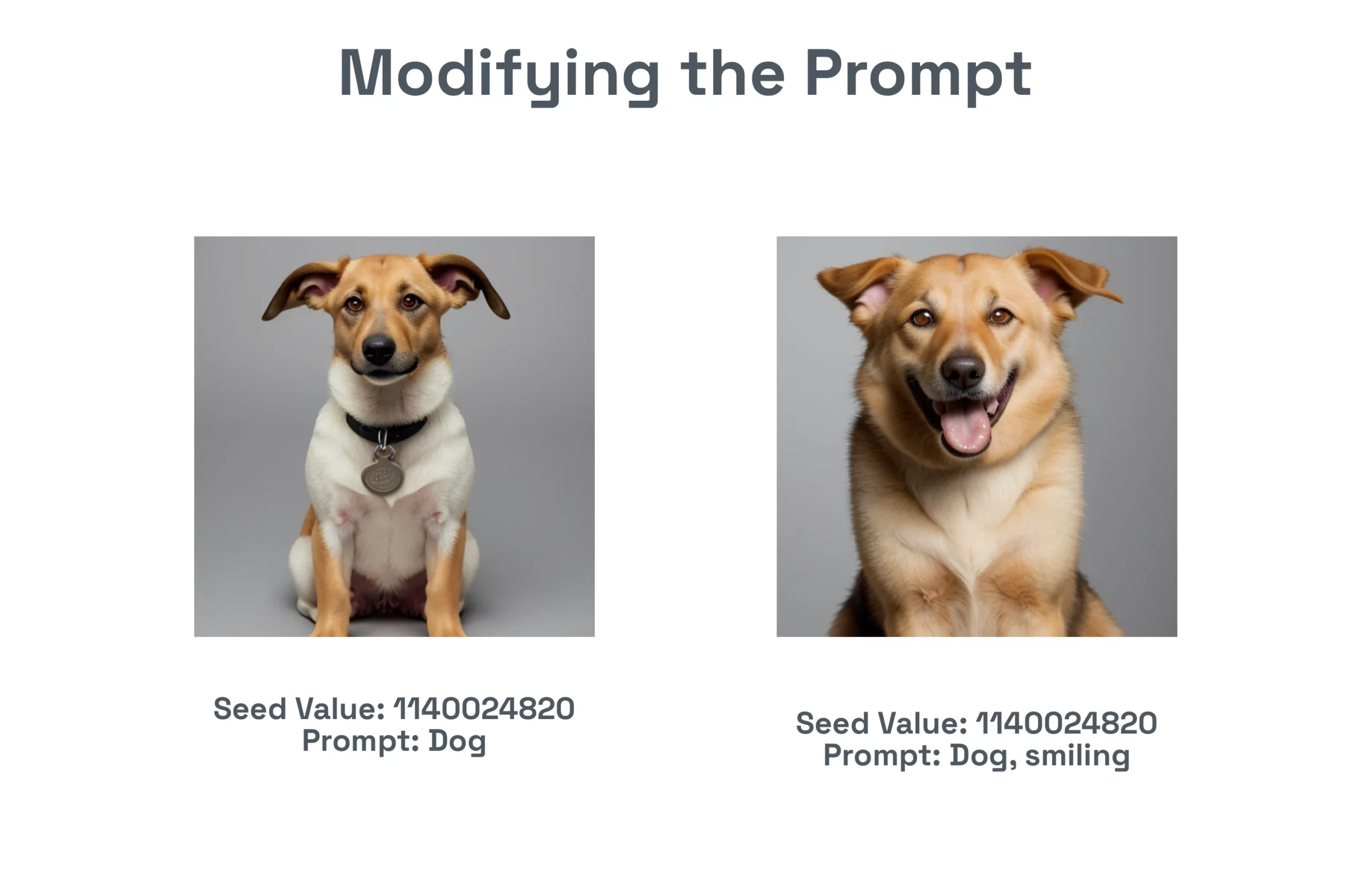

Seeds are most helpful if you want to generate the same image again and make subtle changes to the prompt, characteristics, etc.

Use this with caution, though, as adding entirely new elements to the prompt may produce a completely different image.

For example, going back to our dog, if we compare ‘dog’ and ‘dog, smiling’ we get fairly close representations, although anyone can tell these are two different dogs:

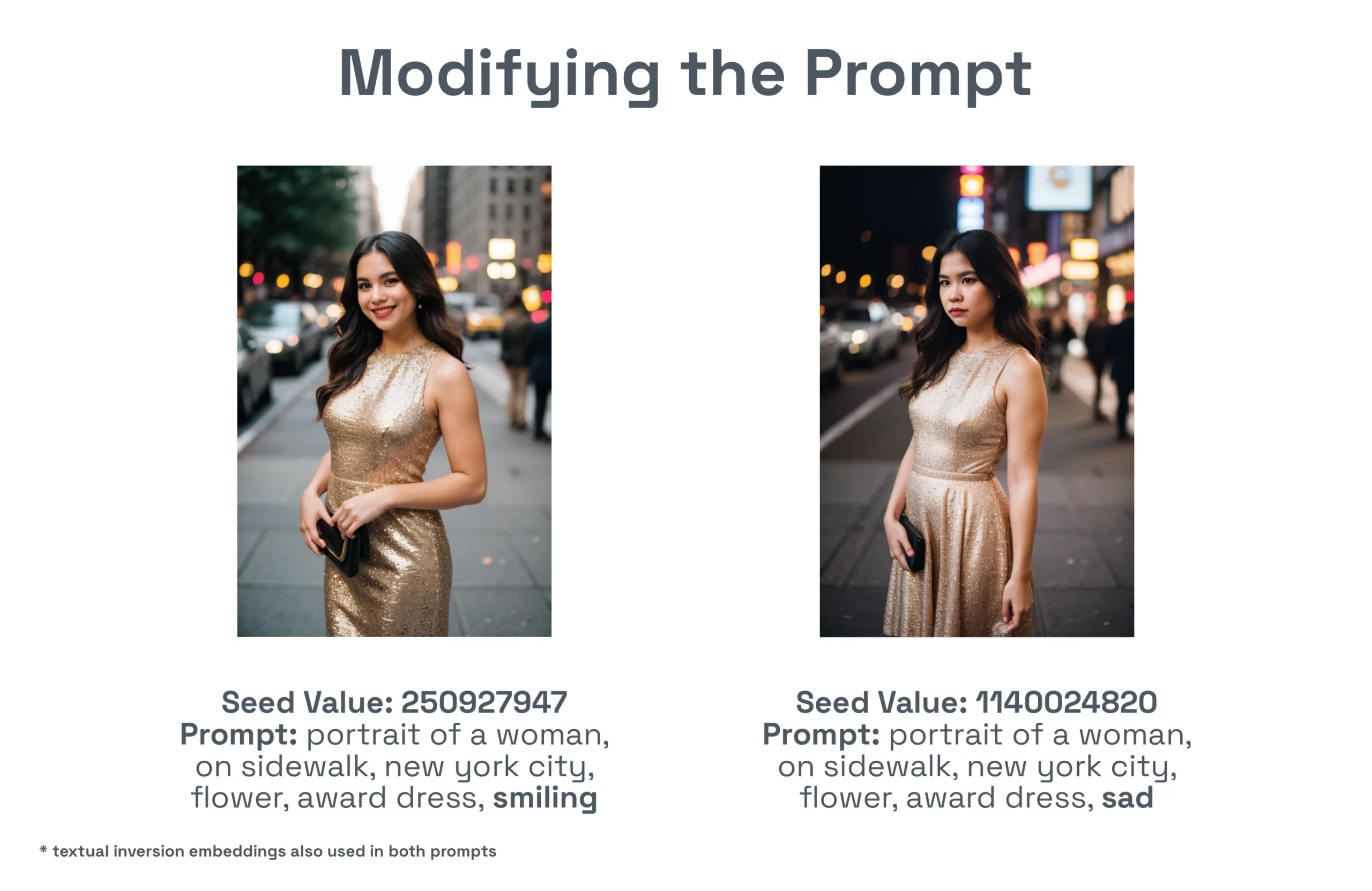

Instead, if you have a very long prompt and you want to change something like the season/weather (rainy, sunny, snowy) or an expression (sad, happy), then reusing the same seed might make sense:

If you wanted to control other features of the image you could define things like hair length, time of day, etc. in your initial prompt as well.
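If you script your generations, the same idea looks roughly like this: keep the seed and every other setting fixed, and vary only one word of the prompt. The field names below (`prompt`, `seed`, `steps`, `width`, `height`) follow the txt2img request of the Automatic 1111 web API as I understand it, so treat them as an assumption and check them against your own install. The prompt text and the `build_payloads` helper are made up for illustration, and nothing is actually sent to a server here.

```python
import json


def build_payloads(base_prompt: str, variants: list[str], seed: int) -> list[dict]:
    """Create one request body per variant, all sharing the same seed and settings."""
    return [
        {
            "prompt": f"{base_prompt}, {variant}",
            "seed": seed,      # fixed, so the starting noise is identical
            "steps": 20,
            "width": 512,
            "height": 512,
        }
        for variant in variants
    ]


payloads = build_payloads(
    "portrait of a woman, long hair, golden hour",  # hypothetical prompt
    ["happy", "sad"],
    seed=1140024820,
)
for payload in payloads:
    print(json.dumps(payload))
```

The long, detailed base prompt does most of the steering, which is why a one-word change tends to leave the overall composition intact.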

Note: Using this method to make subtle changes is inefficient. Instead, you should consider using img2img or inpainting.

Blending Seeds

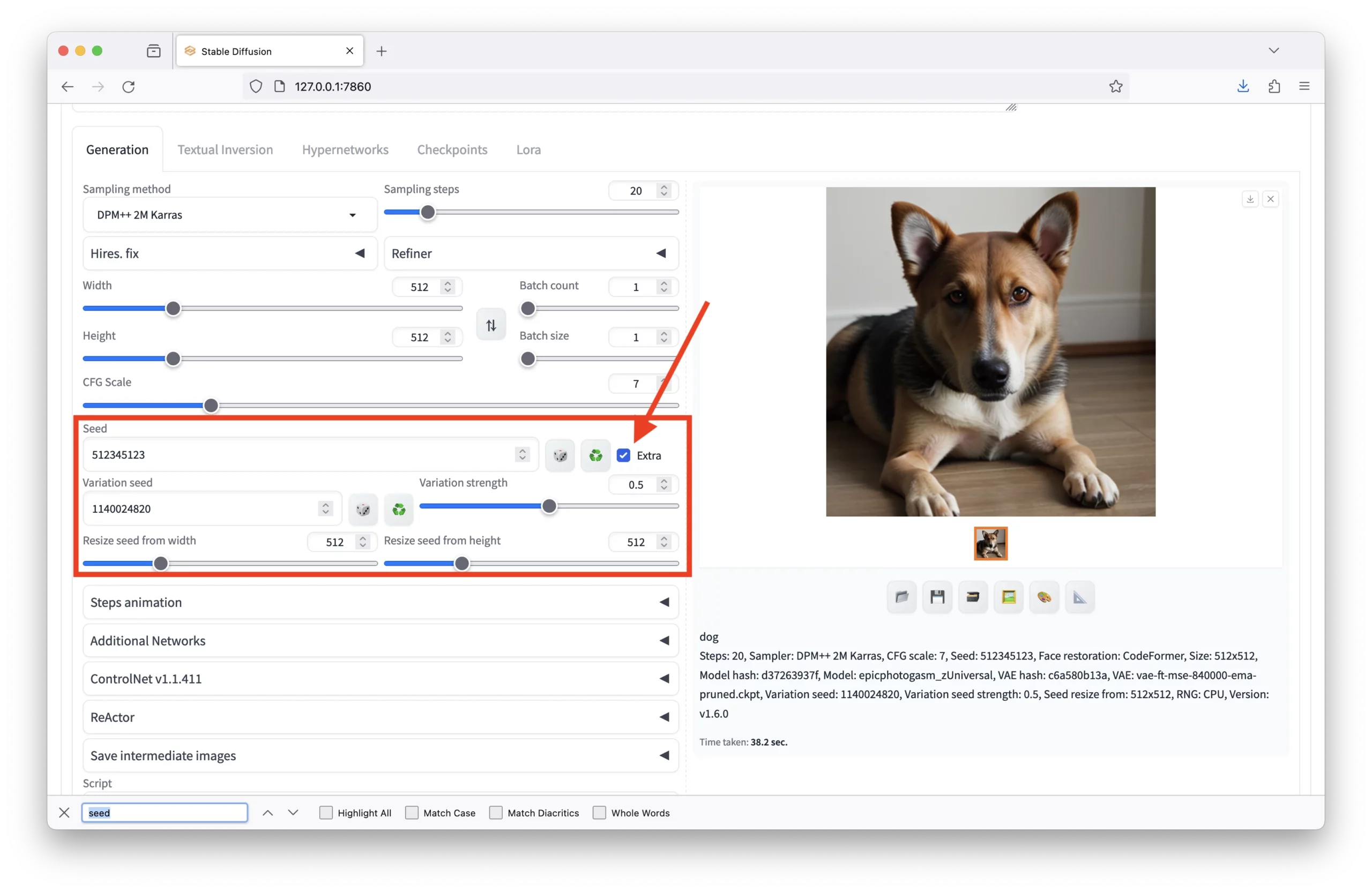

What if you like the characteristics of two completely different seeds? If so, tools like Automatic 1111 offer a way to blend seeds together. Simply click on the ‘Extra’ checkbox and enter the seeds you want to blend together:

You can define the variation strength, or how strongly you want to blend the new seed with the original seed, along with the width and height.

Here’s what the example of our two dogs looks like over a gradual increase in variation strength:

Very cool!

We can see features like pose, background color, and coloring of the dog itself come together between the images.
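Conceptually, blending two seeds means mixing their starting noise before any denoising happens. A common way to mix Gaussian noise is spherical interpolation (slerp), and Automatic 1111 is generally understood to do something along these lines, but treat that as an assumption rather than a description of its source code. The sketch below is a pure-Python toy with hypothetical helper names.

```python
import math
import random


def noise(seed: int, n: int = 16) -> list[float]:
    """Toy stand-in for the noise a seed produces."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def slerp(t: float, a: list[float], b: list[float]) -> list[float]:
    """Spherically interpolate from a (t=0) to b (t=1)."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    dot = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    if abs(dot) > 0.9995:
        # Nearly parallel vectors: plain linear interpolation is stable here.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    omega = math.acos(dot)
    sin_omega = math.sin(omega)
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


original = noise(1)
variation = noise(2)
# Variation strength plays the role of t: 0 keeps the original, 1 is the new seed.
for strength in (0.0, 0.25, 0.5, 0.75, 1.0):
    blended = slerp(strength, original, variation)
    print(strength, [round(v, 2) for v in blended[:3]])
```

Slerp is used instead of a plain average because averaging two noise patterns shrinks their overall magnitude, and the model expects noise of a particular strength.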

How Image Size Affects Seeds

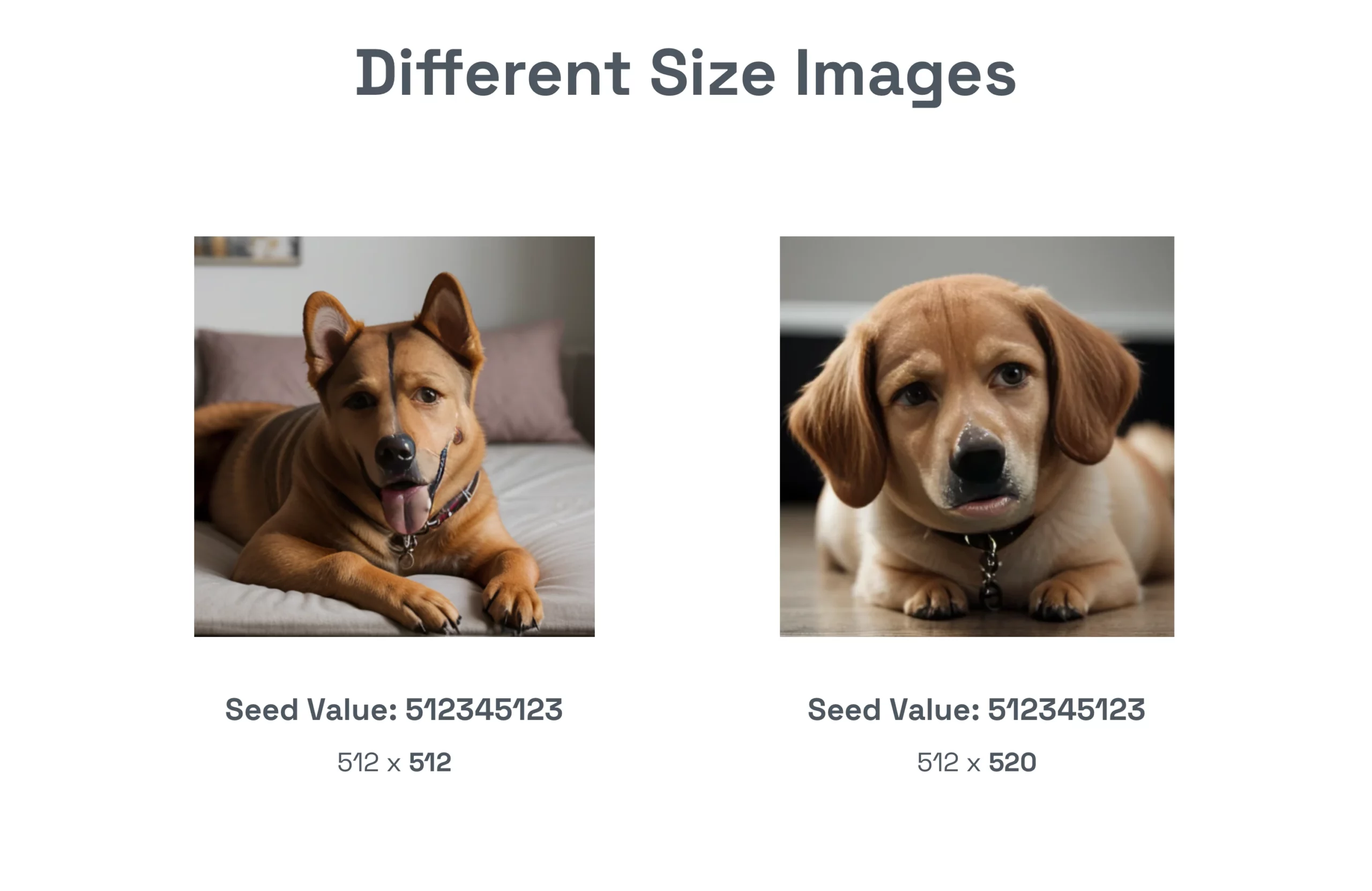

If you want to generate the same image but at a different size, unfortunately, setting the seed and then changing the size will not work, as two completely different noisy images will be generated.

Even if you make the image just one pixel larger or smaller, the result will be completely different.

See how 8 more pixels in height changes the image completely:

Let’s just ignore the quality of the images for a minute 😉

Instead, you would want to rescale the image by sending it to the Extras tab or by using Hires. fix, and then set your preferred scaling method.
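To see why a size change breaks seed reuse, it helps to look at the shape of the noise itself. The sketch below assumes a Stable Diffusion v1-style latent space with 4 channels and one latent cell per 8x8 block of pixels, and it uses Python's built-in generator as a stand-in. Real frameworks fill their tensors differently, so this only illustrates the principle.

```python
import random

LATENT_CHANNELS = 4  # assumption: SD v1-style latent space
DOWNSCALE = 8        # assumption: 8 image pixels per latent cell, per side


def latent_shape(width: int, height: int) -> tuple[int, int, int]:
    """Shape of the starting noise as (channels, rows, columns)."""
    return (LATENT_CHANNELS, height // DOWNSCALE, width // DOWNSCALE)


def latent_noise(seed: int, width: int, height: int) -> list[list[list[float]]]:
    """Fill a latent-shaped grid with Gaussian noise drawn in order from one seed."""
    channels, rows, cols = latent_shape(width, height)
    rng = random.Random(seed)
    return [
        [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]
        for _ in range(channels)
    ]


base = latent_noise(1, 512, 512)
taller = latent_noise(1, 512, 520)  # same seed, 8 more pixels in height

print(latent_shape(512, 512), latent_shape(512, 520))
# The extra row shifts where every later random value lands in the grid.
print(base[1][0][:3] == taller[1][0][:3])
```

Same seed, same stream of random numbers, but a different grid to pour them into, so the model starts from a different noisy image.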

Controlling Randomness

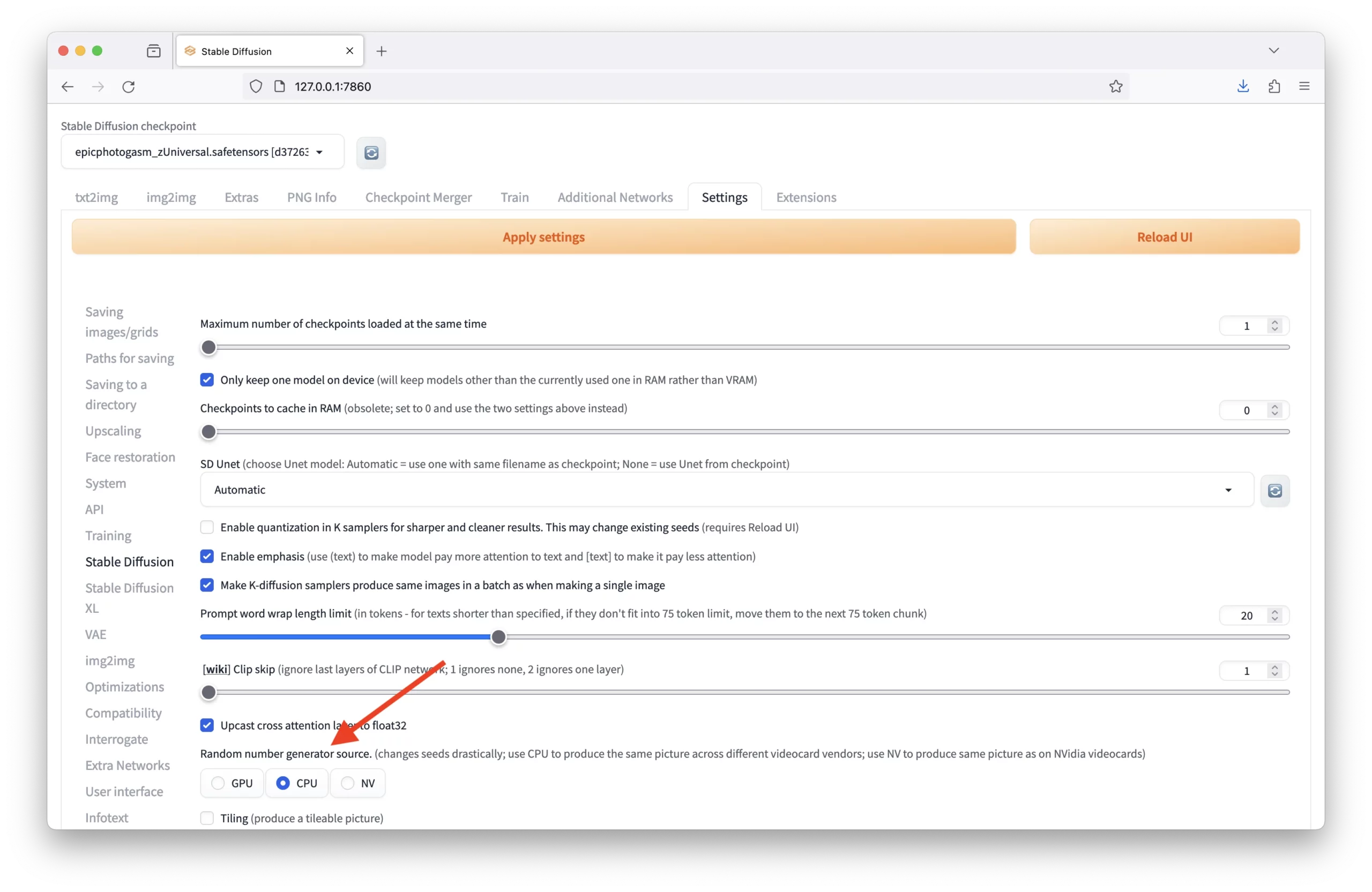

Within the Settings > Stable Diffusion > Random number generator source of the Automatic 1111 WebUI, you can set the random number generator source to CPU, GPU, or NV:

So what does this mean?

This setting relates to the underlying randomness source used in the generation process. Computers generate pseudo-random numbers for tasks like image generation in a Stable Diffusion model. This pseudo-randomness is deterministic, meaning the same seed will always produce the same sequence of numbers in a given random number generation algorithm.

However, the way these pseudo-random numbers are generated can differ based on the hardware or software implementation, which can lead to variations in outcomes if not controlled.

By setting the random number generator source, you can ensure that the diffusion process is consistent across different environments. The ‘CPU’ option ensures that the random numbers are generated in a way that is consistent across different types of CPUs, regardless of the brand or model. This means that the same seed should produce the same image on any computer using a CPU from any vendor. In contrast, the ‘NV’ setting is specific to NVIDIA GPUs, which is helpful if you are aiming for consistency in image output on NVIDIA hardware specifically.

The reason for this option is that different computational platforms may utilize different algorithms or optimizations for random number generation, which could potentially affect the outcome.
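The effect is easy to reproduce in miniature: give two different pseudo-random algorithms the same seed and they produce two different sequences. In the sketch below, Python's built-in Mersenne Twister and a small hand-written linear congruential generator stand in for "two platforms". Neither is the generator the web UI actually uses; they are only here to make the point.

```python
import random


def mersenne_stream(seed: int, n: int = 5) -> list[float]:
    """First n values from Python's built-in generator (Mersenne Twister)."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


def lcg_stream(seed: int, n: int = 5) -> list[float]:
    """First n values from a simple linear congruential generator."""
    modulus = 2**32
    state = seed % modulus
    values = []
    for _ in range(n):
        # Widely published LCG constants; any other choice would also work here.
        state = (1664525 * state + 1013904223) % modulus
        values.append(state / modulus)
    return values


seed = 1140024820
print(mersenne_stream(seed) == mersenne_stream(seed))  # same algorithm: repeatable
print(lcg_stream(seed) == lcg_stream(seed))            # same algorithm: repeatable
print(mersenne_stream(seed) == lcg_stream(seed))       # different algorithms: different numbers
```

Each generator is perfectly repeatable on its own, which is why pinning the random number generator source matters when you share seeds between machines.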
